RESHAPE HEALTH GRANT WINNER

Beyond the Surface: The bedsore early detection journey.

Rubitection is a healthcare platform designed for the early detection and care management of bedsores. It combines AI, a portable device, and a companion app to provide a holistic approach to wound care. This project focused on enhancing the app’s user experience, specifically optimizing the early detection and prevention flow to make it more accessible and intuitive for non-skilled caregivers at home. Throughout the process, we encountered several challenges and gained valuable insights from users and experts, which helped us refine the system to better meet the needs of its users.

Reshape Health Grant winners

The main reasons they won our Reshape Health Grant were the following:

Addressing a Critical Healthcare Challenge:

Rubitection is dedicated to tackling the often overlooked issue of bedsores, which are not only a significant health risk but also a considerable financial burden on the healthcare system. Their mission resonates with our objectives as they strive to break the deadly cycle of bedsores by empowering non-professional caregivers with the necessary tools to manage this complex problem effectively at home.

Innovative Use of Technology:

Their exploration of AI and computer vision for wound care management is exciting. They are at the forefront of integrating advanced technology to help a general audience detect and prevent potential wounds at an early stage. This approach has the potential not only to save substantial healthcare costs but also to save lives by preventing the progression of bedsores.

Promoting Health Equity:

Rubitection’s bedsore assessment system (RAS) advances health equity by addressing key barriers such as transportation, limited mobility, and caregiving responsibilities, enabling timely treatment and improving health outcomes. By supporting care directly in patients' homes, Rubitection removes the need for travel, reduces missed appointments, and ensures consistent care management, which is especially crucial for conditions like bedsores that require regular monitoring and intervention. This model improves accessibility and effectiveness, making quality healthcare achievable for people traditionally underserved by the healthcare system.

Tackling the complexity of bedsores

Bedsores, or pressure ulcers, are a critical issue often overlooked by insurance and clinicians.

When patients are discharged with open wounds, their families can feel overwhelmed by the extensive care required to prevent the wound from worsening and to avoid the development of new wounds.

Families can’t always rely on doctors and nurses for help, since they are not always available to visit and review a patient's recovery.

The management of a patient with an open wound presents a dual challenge.

First, there is a critical need to consistently monitor the wound's healing process, ensuring it progresses towards complete recovery or at least doesn't worsen. Effective care management and continuous treatment are vital to this end.

Second, patients confined to a bed or wheelchair require regular assessments to prevent the onset of new injuries. Early detection is crucial both for managing existing conditions and for preventing additional complications.

The Rubitect Assessment System (RAS) aims to tackle these problems. A low-cost handheld device and a mobile companion app are combined with computer vision capabilities to help low-skilled caregivers with early detection, while the overall system and monitoring platform help them and the patient's family manage wounds efficiently.

This case study concentrates on the user flow for risk assessment and early wound detection, highlighting the importance of promptly identifying and treating wounds, because a worsening wound can lead to serious health complications and expensive treatment.

Given that this is a consumer product, a primary UX challenge was to develop a system that is accessible and requires minimal training, enabling anyone in any care setting to effectively use it.

Early Detection Journey

Guiding caregivers to detect early signs of pressure ulcers is crucial. Visual inspection alone can lead to assessment and treatment errors, especially by low-skilled caregivers. Our challenge was to guide the user through the skin's pressure points, where signs of a wound might appear:

Guide the user to each potential wound site.

Take a picture with the device’s camera.

Assess wound characteristics.

Scan the skin with the hardware device.

Get the preliminary results and instructions.

Each of these steps presented its own challenges for the user interface.

Where to start?

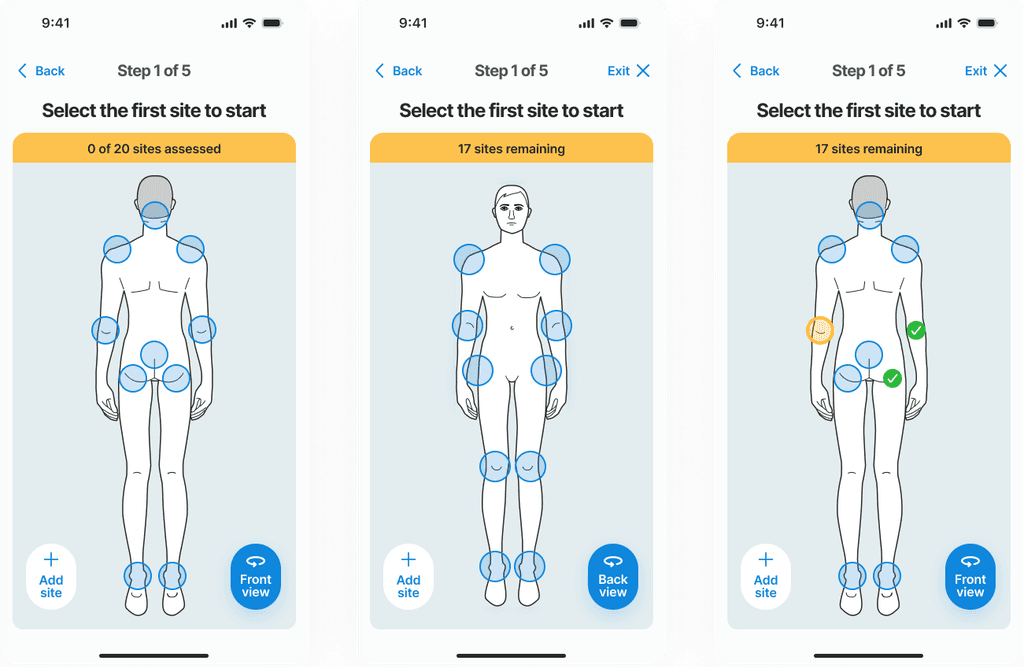

A map of the body

A body map is essential for guiding users on where to check for potential wounds. Before taking a picture, users must understand which areas of the body are most susceptible to pressure ulcers, typically where the skin is pressed against prominent bones. To assist users in identifying these high-risk areas, a wizard along with a detailed body map is provided, clearly highlighting points that are more prone to developing wounds.

This approach raised its own challenges: a two-sided (front and back) body view hides some information, and sites on the side of the body, such as the hips, could be shown on either the front or the back view.

The highlighted feedback from a test user regarding the body map guide reveals an important oversight in the initial design. During the test interview, the user pointed out that their patient had developed a pressure ulcer on the hands, an area not listed in the body map as a common site for such wounds. This observation showed that our model did not account for every scenario in which pressure ulcers can occur: less typical sites can still be at risk under certain conditions.

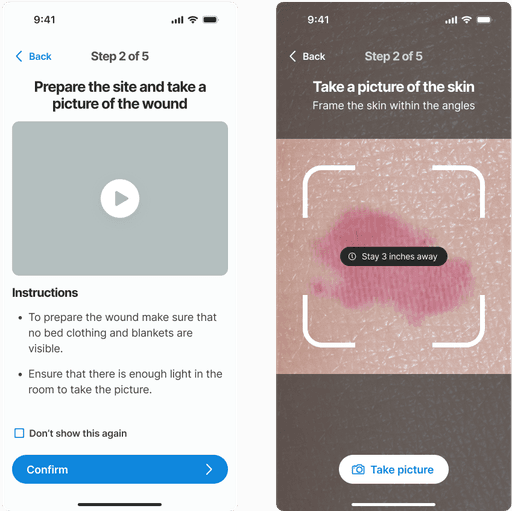

Assessing the skin

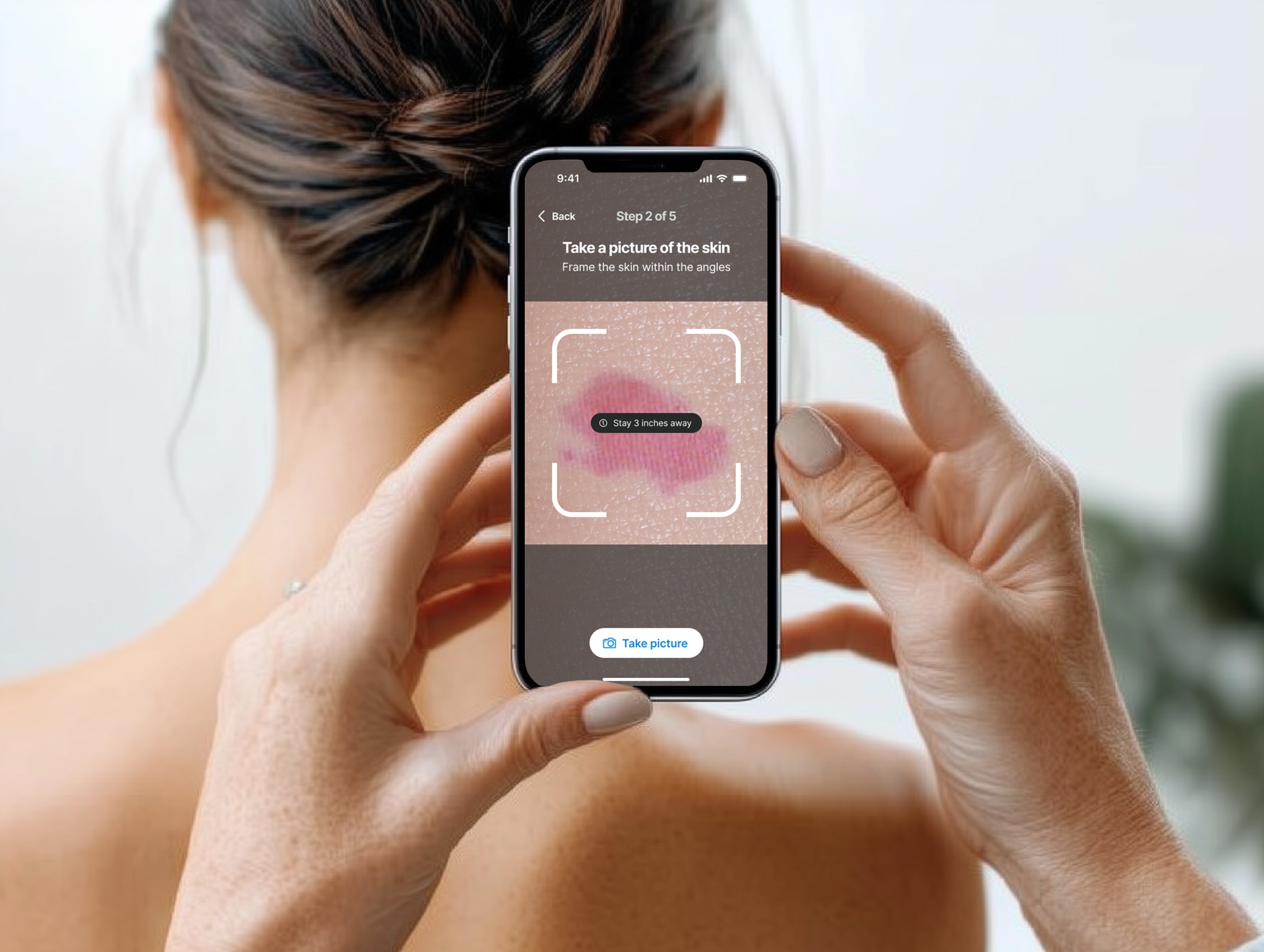

To provide consistent images to the AI model, it was important to set parameters for the framing and distance of each picture, keeping noise and unrelated objects out of the image.

This crucial step involves analyzing images captured by users, enhancing the precision of assessments beyond what the human eye or an inexperienced user can achieve.

1 - Visual analysis: Taking a picture.

Rubitection utilizes computer vision capabilities to help identify potential wounds by simply taking a picture of the skin. Once the skin site is located, the user takes a picture of that body area and starts the assessment cycle.

2 - A few questions to add more context

The next step in the process involves a guided, wizard-like assessment where the user is prompted to provide visual and olfactory information about the skin. This step is crucial because, while the AI and device capabilities play a significant role in identifying and assessing potential wounds, human input is invaluable for a comprehensive evaluation.

Users are not asked to diagnose but to contribute observations that can enhance the accuracy and context of the assessment.

Users answer structured questions about how the skin looks and smells. For example, they might be asked if there is any discoloration, unusual markings, or a noticeable odor, which are all indicators that could suggest infection or other skin complications.

The wizard-like questionnaire worked well in the user testing sessions. A single, easily reachable button let users answer one-handed, making it suitable for busy care settings.

Some questions should include a third option, such as "I don't know," to accommodate situations where a patient cannot express pain or discomfort, a need highlighted during our research interviews.

3 - Scanning with the RAS Device

The final critical task in the risk evaluation process is using the device to analyze skin firmness and temperature. These characteristics are hard to detect or measure through human assessment alone, yet the device's readings provide accurate, reliable data that is essential for quantifying risk.

The hardware probe needs to be paired with the app so the user can fully assess the targeted skin area. Making sure the device was ready to use, and that users knew how to use it, was the main focus of the user interface.

During the sketching phase, we explored various ideas on how to provide immediate feedback to ensure that the device fully covered the targeted skin area. While this feature presented testing challenges and was not included in the final user testing, it remains a valuable concept for future development to enhance the accuracy and user experience of the device.

Results and recommendations

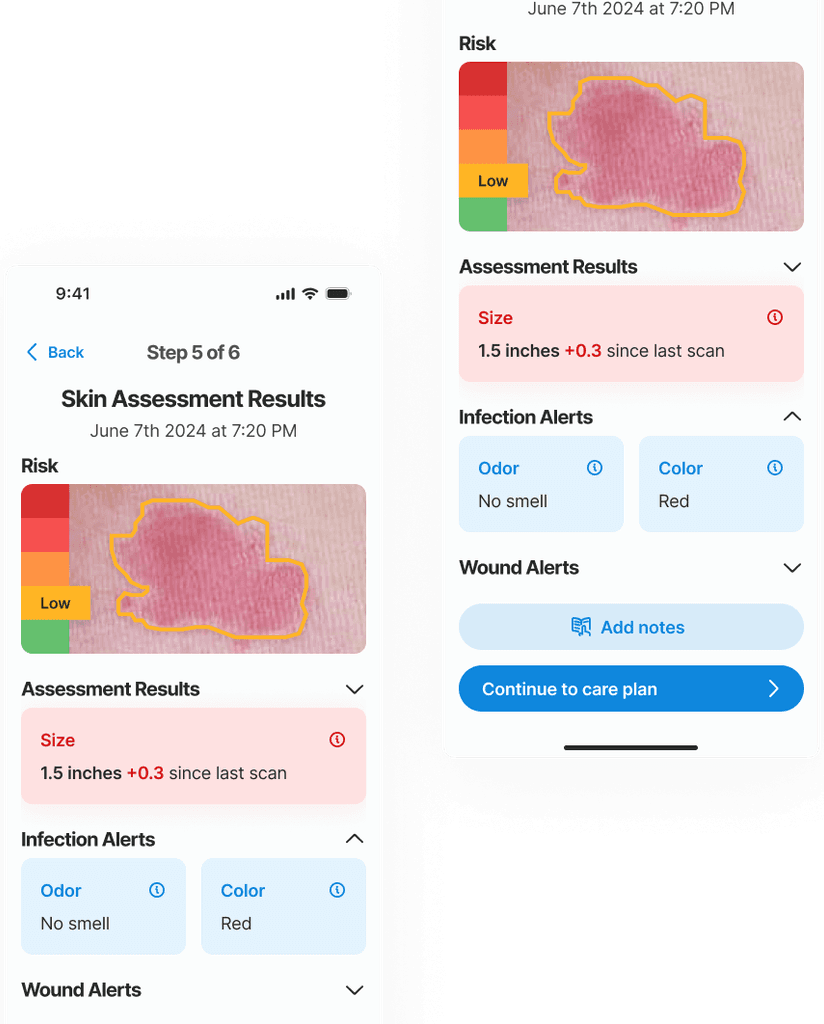

After gathering all necessary inputs, the non-skilled caregiver needs clear, actionable directions to manage the potential wound and prevent it from worsening. The results are presented first, providing crucial information about the risk level and severity. Following this, a comprehensive care plan is presented with detailed instructions on the next steps to effectively prevent the wound from worsening and to promote healing.

In this example, an early-stage (low-risk) wound was found. The risk level is indicated with a familiar color pattern (green, yellow, red).

Assessment results

The system is now ready to provide results on the skin condition using three layers of information:

Visual input on skin color, texture, and size, from the picture.

Sensory information about the patient's overall skin condition, from the wizard.

Objective quantitative data acquired with the device.

The main goal of this step is to communicate the risk level of the skin site and summarize the information provided in the previous steps, informing and educating the caregiver.

The borders of the wound and its stage are determined by an AI algorithm that leverages computer vision, trained on a multitude of images. The size of the wound is also automatically calculated by the AI. Tracking the size changes from the initial appearance is crucial for accurately monitoring the wound's evolution and guiding future treatment strategies.

Wound stages range from Stage 1 to Stage 4, and the stage is an important data point for physicians and for the medical records.

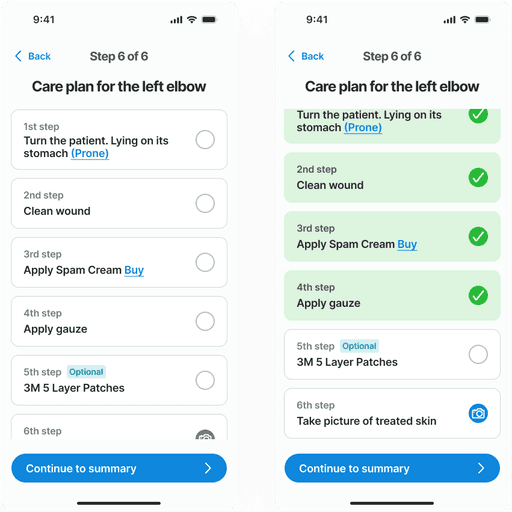

Care plan

The care plan is a structured step-by-step guide to assist caregivers in managing the wound. It presents a prioritized list of tasks that need to be completed in sequence. Each step can be marked as completed to track progress and ensure that all necessary actions have been performed. This feature aids in maintaining the order and priority of the care steps, ensuring that the treatment protocol is followed correctly.
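To make that structure concrete, here is a minimal sketch of how a prioritized, sequential care plan checklist could be modeled. It is an illustration only: the `CarePlan` and `CareTask` names, their fields, and the rule that only the next pending task can be checked off are our assumptions, not Rubitection's implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class CareTask:
    """One step of a care plan (hypothetical model)."""
    priority: int          # lower number = do first
    description: str
    completed: bool = False


@dataclass
class CarePlan:
    """A prioritized checklist completed in sequence (assumed rule)."""
    tasks: list[CareTask] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Keep tasks ordered by priority so the UI always shows the next step first.
        self.tasks.sort(key=lambda t: t.priority)

    def next_task(self) -> CareTask | None:
        # The first task not yet completed is the one the caregiver should do now.
        for task in self.tasks:
            if not task.completed:
                return task
        return None

    def complete_next(self) -> CareTask:
        # Enforce the sequence: only the next pending task can be marked as done.
        task = self.next_task()
        if task is None:
            raise ValueError("all tasks are already completed")
        task.completed = True
        return task

    def pending(self) -> list[CareTask]:
        # Tasks still open; a reminder could be built from this list.
        return [t for t in self.tasks if not t.completed]

    def progress(self) -> float:
        # Fraction of the plan completed, e.g. for a progress bar.
        if not self.tasks:
            return 1.0
        return sum(t.completed for t in self.tasks) / len(self.tasks)


if __name__ == "__main__":
    # Illustrative tasks only, not clinical guidance.
    plan = CarePlan([
        CareTask(2, "Change the bandage"),
        CareTask(1, "Reposition the patient"),
        CareTask(3, "Re-scan the skin site"),
    ])
    plan.complete_next()
    print(plan.next_task().description)  # Change the bandage
    print(f"{plan.progress():.0%}")      # 33%
```

Keeping completion state on each task, rather than deleting finished ones, is what lets a caregiver leave the list and come back to the same place later.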

Feedback

During the user validation phase, we uncovered key insights about these screens.

Some users expressed concern about the feasibility of completing all prescribed tasks immediately after a body scan. They emphasized the importance of being able to mark tasks as completed and having the flexibility to return to the list later. This feedback highlighted the need for the app to provide a simple way to remind them about pending tasks.

Another user, who had firsthand experience managing bedsores, suggested that the app needed more flexibility in its treatment options. They recommended adding the ability to customize or choose alternative creams and bandages, reflecting the varied preferences and needs of different users.

After attending to one site, the skin assessment process moves on to the next body site, repeating the cycle until the entire body has been examined. Since the application is designed for use by multiple caregivers, this task can be paused and later resumed by another user. This feature accommodates varying shifts typical in care facilities and homes.
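The site-by-site cycle, and the ability for another caregiver to pick it up later, can be sketched as a small session object that remembers which body sites have been assessed and by whom. This is a hypothetical model: the `ScanSession` and `SiteResult` names, the example site list, and JSON as the save format are assumptions standing in for whatever storage the real app uses.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field


@dataclass
class SiteResult:
    # A real record would also hold the picture, wizard answers and device readings.
    site: str
    assessed_by: str


@dataclass
class ScanSession:
    """A full-body assessment shared across caregivers (hypothetical)."""
    sites: list[str]
    results: list[SiteResult] = field(default_factory=list)

    def next_site(self) -> str | None:
        # Sites are visited in the listed order; the first unassessed one is next.
        done = {r.site for r in self.results}
        for site in self.sites:
            if site not in done:
                return site
        return None

    def record(self, site: str, caregiver: str) -> None:
        # Only the current site can be recorded, keeping the cycle in order.
        expected = self.next_site()
        if site != expected:
            raise ValueError(f"expected {expected!r}, got {site!r}")
        self.results.append(SiteResult(site, caregiver))

    def is_complete(self) -> bool:
        return self.next_site() is None

    def to_json(self) -> str:
        # Pausing = saving the session so another caregiver can resume it.
        return json.dumps(asdict(self))

    @classmethod
    def from_json(cls, data: str) -> ScanSession:
        raw = json.loads(data)
        return cls(sites=raw["sites"],
                   results=[SiteResult(**r) for r in raw["results"]])


if __name__ == "__main__":
    # Illustrative site list only.
    session = ScanSession(["sacrum", "left hip", "right heel"])
    session.record("sacrum", "day-shift caregiver")
    saved = session.to_json()                 # shift ends, session is paused
    resumed = ScanSession.from_json(saved)    # next caregiver picks it up
    print(resumed.next_site())                # left hip
```

Storing results per site, instead of a single "current step" counter, also keeps a record of who assessed each site, which matters when shifts change.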

Bedsores on black skin

There is a significant challenge in detecting early-stage bedsores on darker skin tones. Black skin does not show the typical redness and subtle color changes that are easy to identify on lighter skin, so even trained professionals struggle with early detection. AI technology can be invaluable here, helping to detect subtle variations in skin condition that the human eye might miss.

The value of reminders

Interviews with nurses and caregivers have taught us about the crucial role that routines and reminders play in effective wound care. Specifically, they highlighted the importance of regularly changing a patient's position to prevent the development of pressure ulcers. This insight led us to prioritize a notification system for the app, which now features prominently on the home screen and is tailored to support regular care tasks such as repositioning and changing bandages.
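A recurring care task such as repositioning can be modeled as a reminder whose next due time is counted from the last time the task was actually done. The sketch below is an assumption about how such a notification list could work, not the app's code; `RecurringReminder`, `due_reminders`, and the intervals used in the example are hypothetical placeholders, since the actual intervals would come from the care plan.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class RecurringReminder:
    """A repeating care task, e.g. repositioning (hypothetical model)."""
    task: str
    interval: timedelta   # placeholder; would come from the care plan
    last_done: datetime

    def next_due(self) -> datetime:
        # The next reminder falls one interval after the last completion.
        return self.last_done + self.interval

    def is_overdue(self, now: datetime) -> bool:
        return now >= self.next_due()

    def mark_done(self, when: datetime) -> None:
        # Completing the task restarts the cycle from the actual completion time.
        self.last_done = when


def due_reminders(reminders: list[RecurringReminder],
                  now: datetime) -> list[RecurringReminder]:
    # What a home screen could surface: overdue tasks, most overdue first.
    overdue = [r for r in reminders if r.is_overdue(now)]
    return sorted(overdue, key=lambda r: r.next_due())


if __name__ == "__main__":
    start = datetime(2024, 1, 1, 8, 0)
    reminders = [
        RecurringReminder("Reposition the patient", timedelta(hours=2), start),
        RecurringReminder("Change the bandage", timedelta(hours=12), start),
    ]
    for r in due_reminders(reminders, datetime(2024, 1, 1, 11, 0)):
        print(r.task)  # Reposition the patient
```

Counting from the last completion rather than from a fixed clock schedule means a late repositioning pushes the next reminder back instead of firing again immediately.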

Through our collaboration with Rubitection, we worked to enhance the app’s user experience, making it accessible and straightforward for caregivers. During the design process, we refined the user interface to guide caregivers through each step of wound assessment, collecting all the input data needed to deliver a care plan.

We encountered and overcame several challenges, such as optimizing the body map to include less common wound sites and making the system flexible enough to accommodate varied user preferences and treatment options. Insights from extensive user and expert feedback were invaluable in iterating on and refining the UI and improving the user experience.